GIGABYTE AI TOP

TRAIN YOUR OWN AI ON YOUR DESK

GIGABYTE AI TOP represents a groundbreaking desktop AI training solution that revolutionizes local model development. Featuring advanced memory offloading technology and support for open-source AI models, it delivers enterprise-grade capabilities with the convenience of desktop computing, enabling both beginners and experts to conduct sophisticated AI training with enhanced data privacy and security.

Win Advantages with GIGABYTE AI TOP

405B Parameter LLM Support

Flexible Upgradability

Local Privacy & Security

Household Power Compatible

Memory Offloading Solution

Intuitive Setup & Control

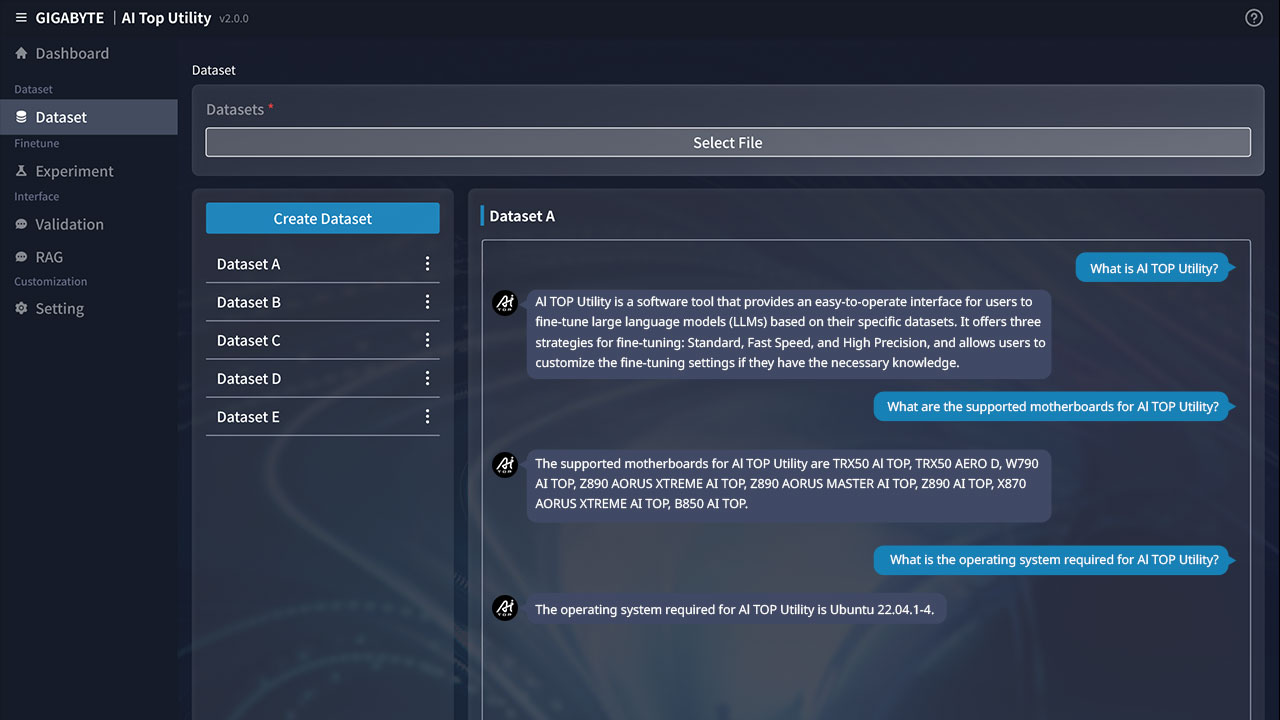

AI TOP Utility

Revolutionize Local AI Training

The AI TOP Utility revolutionizes local AI model training with a user-friendly interface, reinvented workflows, and a real-time progress dashboard. Through advanced memory offloading technology, it supports top open-source AI models, allowing both beginners and experts to train large language models (LLMs) for text generation and large multimodal models (LMMs) for image and video creation. The intuitive dataset creation tools and preset training strategies enable users to start their AI projects without programming expertise.

Get Started Now!

Run the AI TOP Utility on your AI TOP Hardware.

* Available on Linux and WSL2 on Windows 11.

* Please update AI TOP motherboards to the latest BIOS version to ensure normal operation.

- Download Model: A new feature that helps users automatically download Hugging Face models for training and deployment.

- Mount SSD: A new feature that allows users to automatically mount 1 to 2 NVMe SSDs to offload training memory to SSDs.

- Multinode Optimization: A new feature that reduces VRAM usage and increases model training speed when using multiple GPUs or clustered training.

- Bug Fixes: Resolved various issues to improve stability and performance.

AI TOP Hardware

Enterprise-grade AI at Your Desk

The AI TOP Hardware ecosystem integrates GIGABYTE's optimized components designed for AI training workloads. From individual components to complete setup kits and clustering solutions, it delivers enterprise-grade performance while remaining compatible with household power systems.

Optimized Power Efficiency

Ultra Durable for AI Training

Flexible Configuration

Home Power Compatible

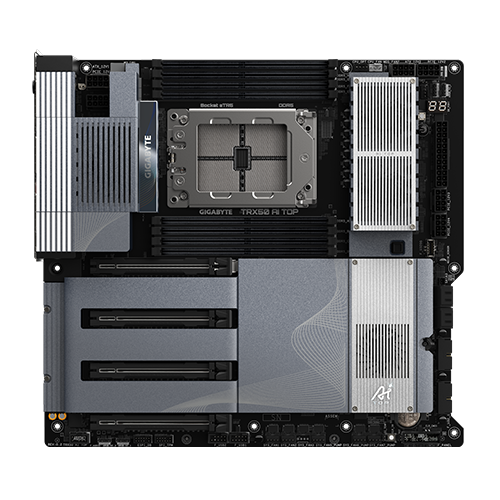

AI TOP Motherboard

Z890/X870E motherboards with dual-GPU and Thunderbolt 5 support, optimized for AI training performance with XMP AI Boost.

Learn More

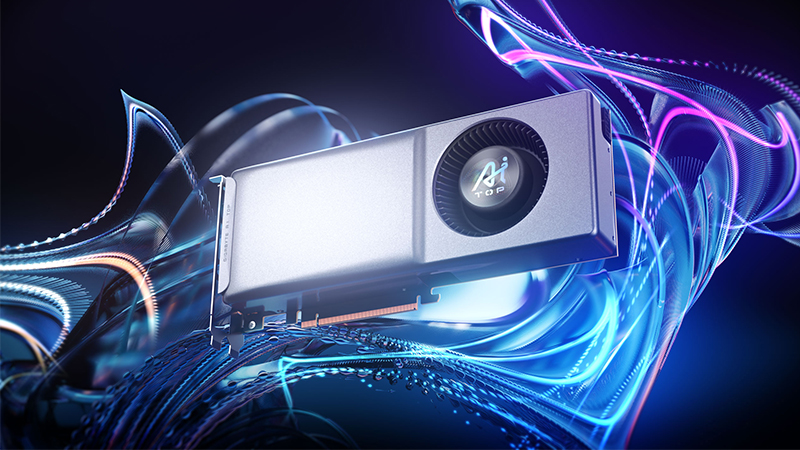

AI TOP Graphics Card

Unleash the full potential of AI Computing with optimal cooling solutions, long-lasting designs, and instant data swapping via VRAM.

Learn More

AI TOP SSD

109,500 TBW endurance with RAID support, 20x more durable than enterprise SSDs for reliable AI training.

Learn More
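To put the TBW rating in perspective, a rough sketch can convert it into years of service at a given daily write volume. The 109,500 TBW figure is the one quoted for the AI TOP SSD; the daily write volume is a hypothetical workload chosen for illustration, not a measured value, and the function name is an assumption of this sketch.

```python
def endurance_years(tbw_rating_tb: float, tb_written_per_day: float) -> float:
    """Return how many years a drive lasts before reaching its TBW rating."""
    if tb_written_per_day <= 0:
        raise ValueError("daily write volume must be positive")
    return tbw_rating_tb / tb_written_per_day / 365


# 109,500 TBW is the quoted rating; 10 TB/day is an assumed workload.
years = endurance_years(109_500, 10)
print(f"{years:.1f} years at 10 TB/day")
```

Heavier offloading workloads write more per day, so the same calculation can be repeated with a higher daily volume to see how quickly the rating would be consumed.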

AI TOP PSU

80 Plus Titanium-certified power supply supporting four PCIe Gen 5 GPUs, with real-time power monitoring.

Learn More

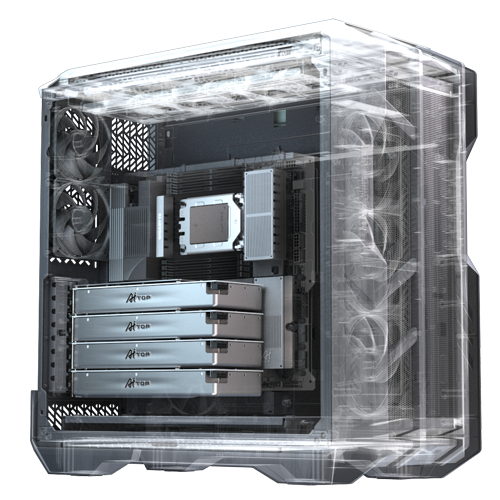

AI TOP Chassis

AORUS C500G and GIGABYTE C701G chassis engineered for optimal heat dissipation in AI workloads.

Learn More

AI TOP Setup Kits

Essential barebone package with motherboard, PSU, and chassis for local AI training. Connect multiple kits via Ethernet and Thunderbolt for expanded performance.

AI TOP 500

Perfect for medium-sized enterprises in finance, engineering, education, and medical research.

- Supports 405B-parameter models

- Up to four 2-slot graphics cards

- Dual PSU support

- AI TOP Clustering ready

AI TOP 100

Ideal for small businesses, startups, postgraduates, and personal projects.

- Supports 110B+ model fine-tuning

- Up to two 3-slot graphics cards

- Single PSU configuration

- AI TOP Clustering ready

AI TOP Clustering

Take Your AI Training Further

Connect multiple AI TOP machines via Ethernet or Thunderbolt ports to break through single system limitations. As AI models grow in size and complexity, especially with LMMs, expand your computing capabilities to match your ambitions.

Performance Boost 1.6x Faster

- Up to 1.6x faster training speed with 2-PC setup

- Enhanced memory capacity for larger models

- Efficient workload distribution
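The "up to 1.6x" figure can be read as a scaling efficiency. The sketch below is a back-of-the-envelope calculation, not a GIGABYTE benchmark tool: the function names and the 40-hour single-machine baseline are hypothetical, and 1.6x is a best-case value.

```python
def scaling_efficiency(speedup: float, machines: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    if machines < 1:
        raise ValueError("machines must be at least 1")
    return speedup / machines


def clustered_hours(single_machine_hours: float, speedup: float) -> float:
    """Estimated training time after applying a measured speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return single_machine_hours / speedup


efficiency = scaling_efficiency(1.6, 2)  # 1.6x on a 2-PC setup, as quoted
hours = clustered_hours(40.0, 1.6)       # 40 hours is a hypothetical baseline
print(f"efficiency: {efficiency:.0%}, estimated time: {hours:.1f} h")
```

An efficiency below 100% is expected, since part of each training step is spent exchanging data between machines rather than computing.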

Flexible Connectivity

- Thunderbolt 5 (80Gbps) for maximum speed

- 10GbE for long-distance clustering

- Thunderbolt 4 support
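To compare these links, the following sketch estimates the ideal time to move a payload between two machines. It ignores protocol overhead and latency, so real transfers will be slower. The 80 Gbps rate for Thunderbolt 5 is quoted above; the 40 Gbps rate for Thunderbolt 4 is its standard nominal rate and is not stated on this page, and the payload size is a hypothetical example.

```python
# Nominal link rates in gigabits per second. Only the Thunderbolt 5 value is
# quoted on this page; the Thunderbolt 4 value is an assumption from its spec.
LINK_GBPS = {"Thunderbolt 5": 80.0, "Thunderbolt 4": 40.0, "10GbE": 10.0}


def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a payload, ignoring protocol overhead and latency."""
    if link_gbps <= 0:
        raise ValueError("link rate must be positive")
    return payload_gigabytes * 8 / link_gbps


payload_gb = 26.0  # hypothetical: 13B parameters at 2 bytes each
for name, gbps in LINK_GBPS.items():
    print(f"{name}: {transfer_seconds(payload_gb, gbps):.1f} s")
```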

AI TOP Recommendations

Choose the Best Setup for Your Usage

| Use Case | Educational Projects | Individual AI Developers | Small Studios | AI Workflows for SMBs |
| --- | --- | --- | --- | --- |
| Description | AI TOP is perfect for training a small AI model from scratch to gain a comprehensive understanding of AI model training. | AI TOP is the best tool to fine-tune LLMs with instant results and schedule training experiments whenever it suits you best. | AI TOP is affordable for small studios and provides absolute privacy and security for your proprietary datasets. | AI TOP is the ideal platform with lower TCO for small and medium-sized businesses to deploy AI models in their workflows and improve productivity. |
| Best Model Size for Fine-tuning (parameters) | 8B | 13B | 30B | 70B |
| Training Time for 100K Samples | 288 Hours | 32 Hours | 17 Hours | 15 Hours |
| Recommended Sets | | | | |

Performance

High Performance and Efficiency for AI Training

Shorter training times are better. Higher power efficiency is better.

[Charts: training time and power efficiency for 13B and 70B models, with memory offloading to DRAM+SSD and SSD+SSD]

Footnotes:

- PC configs:

- AI TOP PC: TRX50 AI TOP motherboard with Ryzen Threadripper 7985WX processor, 2x RTX 4070 Ti SUPER AI TOP graphics cards, 2TB of system memory, and 2x SSDs of 1TB in RAID 0.

- Regular PC: Z790 AORUS MASTER motherboard with Intel Core i9 13th Gen processor, 1x RTX 4090 graphics card, 64GB of system memory, and 1x SSD of 2TB.

- Training configs: AI TOP Utility 1.0.1, Batch size: 4, Epochs: 1, Finetuning type: Full and LoRA.

- Training time: Calculated from ETA shown on Dashboard in AI TOP Utility. May vary by hardware, system and training configurations.

- Power efficiency: Calculated by measured tokens per watt. May vary by hardware, system and training configurations.
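The power-efficiency footnote does not spell out the formula. One plausible reading, shown here as an assumption rather than the documented method, is training throughput in tokens per second divided by average power draw in watts. The function name and all input values below are illustrative, not measurements.

```python
def tokens_per_watt(tokens_processed: int, elapsed_seconds: float,
                    average_power_watts: float) -> float:
    """Throughput (tokens per second) divided by average power draw (watts)."""
    if elapsed_seconds <= 0 or average_power_watts <= 0:
        raise ValueError("elapsed time and power must be positive")
    throughput = tokens_processed / elapsed_seconds
    return throughput / average_power_watts


# Made-up inputs: 1.2M tokens in 10 minutes at an average draw of 800 W.
value = tokens_per_watt(1_200_000, 600.0, 800.0)
print(f"{value:.2f} tokens/s per watt")
```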

GIGABYTE Advantages

Why GIGABYTE AI TOP?

FAQs


What is AI TOP?

Launched at COMPUTEX 2024, AI TOP is a groundbreaking desktop solution for local AI training, fine-tuning, and inferencing. By combining AI TOP Utility software with AI TOP Hardware, it enables users to "Train Your Own AI on Your Desk." The solution supports up to 405B parameter models while delivering enterprise-grade performance on regular household power systems.


How can I benefit from using AI TOP?

AI TOP empowers users with comprehensive local AI training capabilities. Key benefits include:

- Train AI models up to 405B parameters

- Ensure complete data privacy and security

- Run on regular household electricity

- Flexible upgrade options and clustering support

- Enhanced features and broader model support since the October 2024 update

What is the AI TOP Utility?

AI TOP Utility revolutionizes local AI training with an intuitive interface for training both large language models (LLMs) and large multimodal models (LMMs). It features advanced memory offloading technology, real-time system monitoring, and customizable training strategies for efficient model development.


What are the system requirements for the AI TOP Utility?

AI TOP Utility runs on AI TOP Hardware configurations. It supports both Linux and Windows Subsystem for Linux (WSL2) on Windows 11. For a complete list of supported hardware, please check our supported hardware list.


What models are supported by the AI TOP Utility?

AI TOP Utility supports leading open-source models for both text generation (LLM) and image/video creation (LMM). It handles various model architectures including the latest 405B-parameter models. For a detailed list of supported models, please refer to the user manual in the software package.


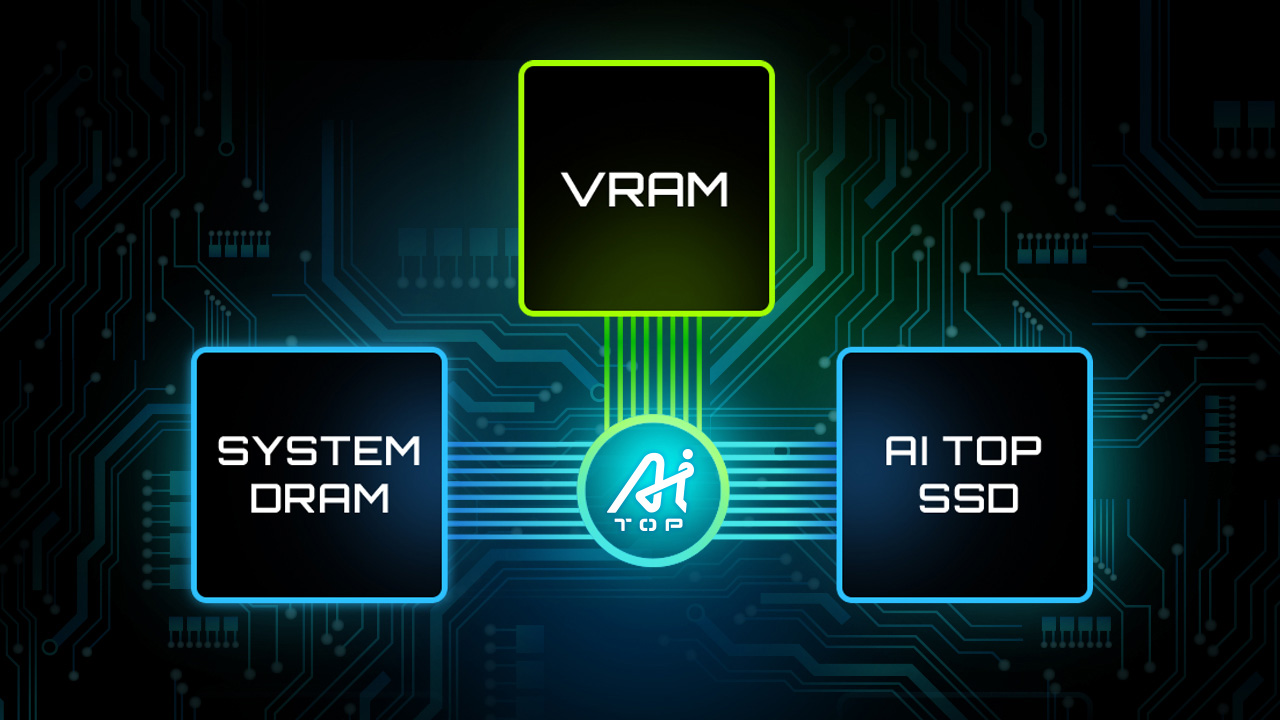

How does Memory Offloading work in AI TOP Utility?

Memory Offloading is a cutting-edge technology that significantly increases the size of the LLMs a system can train. It offloads data generated during training from VRAM to system DRAM and/or SSDs, according to the selected strategy. You can choose the memory offloading strategy based on the LLM you are going to train or fine-tune.
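As a rough illustration of why offloading matters, the sketch below estimates the training state of a model and spreads it across memory tiers, fastest first. It is a simplified model, not the AI TOP Utility's actual strategy. It assumes the common rule of thumb of about 16 bytes per parameter for full fine-tuning with Adam in mixed precision (fp16 weights and gradients plus fp32 optimizer states) and ignores activations. The tier capacities and all names are hypothetical.

```python
from dataclasses import dataclass

# Assumed rule of thumb: 2 B weights + 2 B gradients + 12 B fp32 Adam states.
BYTES_PER_PARAM_FULL_FT = 16


@dataclass
class Tier:
    name: str
    capacity_gb: float


def training_state_gb(params_billion: float,
                      bytes_per_param: int = BYTES_PER_PARAM_FULL_FT) -> float:
    """Approximate size of weights, gradients, and optimizer states in GB."""
    return params_billion * bytes_per_param  # billions of params * bytes each


def plan_offload(required_gb: float, tiers: list[Tier]) -> dict[str, float]:
    """Fill tiers in the given order and return the GB placed on each."""
    placement: dict[str, float] = {}
    remaining = required_gb
    for tier in tiers:
        used = min(remaining, tier.capacity_gb)
        if used > 0:
            placement[tier.name] = used
        remaining -= used
    if remaining > 0:
        raise MemoryError(f"{remaining:.0f} GB does not fit in any tier")
    return placement


# Hypothetical capacities, ordered from fastest to slowest tier.
tiers = [Tier("VRAM", 32.0), Tier("DRAM", 2048.0), Tier("SSD", 2000.0)]
print(plan_offload(training_state_gb(70), tiers))
```

Under these assumptions, a 70B model needs far more memory than the GPUs provide, so most of the training state ends up in DRAM; a larger model would spill further into the SSD tier.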


What is the AI TOP Hardware?

AI TOP Hardware is a complete ecosystem including motherboards, graphics cards, power supply units, and SSDs, optimized for AI training workloads. Available as individual components or complete setup kits (AI TOP 500/100), it delivers enterprise-grade performance while remaining compatible with household power systems.


What makes the AI TOP Hardware different from regular PCs?

AI TOP Hardware features specialized designs for AI workloads, including:

- Support for dual-GPU configuration with Thunderbolt 5

- Advanced thermal design for sustained AI operations

- Ultra-durable SSDs with up to 20x enterprise-grade endurance

- Power supply units supporting up to four PCIe Gen 5 GPUs

- Clustering capability for expanded performance

How to choose the right configuration of AI TOP Hardware?

AI TOP offers flexible configurations to match different needs:

- AI TOP 500: Designed for medium-sized businesses in finance, engineering, education, and medical research

- AI TOP 100: Perfect for small businesses, startups, postgraduates, and personal projects

Learn more about recommended setups
