Bringing a Whole New Level of Performance and Efficiency to the Modern Data Center

NVIDIA Grace™ CPU Superchip

144 Arm Neoverse V2 Cores

Armv9.0-A architecture for better compatibility and easier execution of other Arm-based binaries.

Up to 960GB CPU LPDDR5X memory with ECC

Balances bandwidth, energy efficiency, capacity, and cost with the first data center implementation of LPDDR technology.

Up to 8x PCIe Gen5 x16 links

Multiple PCIe Gen5 links for flexible add-in card configurations and system communication.

NVLink-C2C Technology

Industry-leading chip-to-chip interconnect technology with up to 900GB/s of bandwidth, alleviating bottlenecks and enabling a coherent memory interconnect.
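
To put the 900GB/s figure in context, the short sketch below compares it with a single PCIe Gen5 x16 link. It assumes the 900GB/s is a bidirectional total and uses the standard PCIe Gen5 signaling rate (32 GT/s per lane, 128b/130b encoding). Protocol overhead is ignored, and the function names are hypothetical, chosen only for this illustration.

```python
# Rough bandwidth comparison: NVLink-C2C vs. one PCIe Gen5 x16 link.
# Assumptions (not from the page): 900GB/s is a bidirectional total;
# PCIe protocol overhead beyond line encoding is ignored.

PCIE_GEN5_GT_PER_S = 32.0          # raw transfer rate per lane
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b line encoding
LANES = 16                         # x16 link
NVLINK_C2C_GB_PER_S = 900.0        # figure quoted in the text


def pcie_gen5_x16_gb_per_s(bidirectional: bool = True) -> float:
    """Theoretical bandwidth of one PCIe Gen5 x16 link in GB/s."""
    per_lane = PCIE_GEN5_GT_PER_S * ENCODING_EFFICIENCY / 8  # bits -> bytes
    one_way = per_lane * LANES
    return one_way * 2 if bidirectional else one_way


def nvlink_to_pcie_ratio() -> float:
    """How many PCIe Gen5 x16 links one NVLink-C2C connection equals."""
    return NVLINK_C2C_GB_PER_S / pcie_gen5_x16_gb_per_s(bidirectional=True)


if __name__ == "__main__":
    print(f"PCIe Gen5 x16, one direction: {pcie_gen5_x16_gb_per_s(False):.1f} GB/s")
    print(f"PCIe Gen5 x16, bidirectional: {pcie_gen5_x16_gb_per_s(True):.1f} GB/s")
    print(f"NVLink-C2C / PCIe Gen5 x16:   {nvlink_to_pcie_ratio():.1f}x")
```

Under these assumptions, one NVLink-C2C connection carries roughly seven times the theoretical bandwidth of a PCIe Gen5 x16 link.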

NVIDIA Scalable Coherency Fabric (SCF)

NVIDIA-designed mesh fabric and distributed cache architecture for higher bandwidth and better scalability.

InfiniBand Networking Systems

Designed for maximum scale-out capability, with up to 100GB/s total bandwidth across all superchips through InfiniBand switches, BlueField-3 DPUs, and ConnectX-7 NICs.

NVIDIA CUDA Platform

The CUDA platform is optimized for the new Arm-based CPU, enabling accelerated computing on the superchips alongside add-in cards and networking systems.

Stepping Further into the Era of AI and GPU-Accelerated HPC

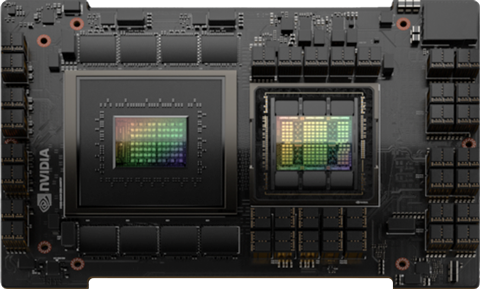

NVIDIA GH200 Grace Hopper Superchip

72 Arm Neoverse V2 Cores

Armv9.0-A architecture for better compatibility and easier execution of other Arm-based binaries.

Up to 480GB CPU LPDDR5X memory with ECC

Balances bandwidth, energy efficiency, capacity, and cost with the first data center implementation of LPDDR technology.

96GB HBM3 or 144GB HBM3e GPU memory

High-bandwidth memory (HBM) for improved performance in memory-intensive workloads.

Up to 4x PCIe Gen5 x16 links

Multiple PCIe Gen5 links for flexible add-in card configurations and system communication.

NVLink-C2C Technology

Industry-leading chip-to-chip interconnect technology with up to 900GB/s of bandwidth, alleviating bottlenecks and enabling a coherent memory interconnect.

InfiniBand Networking Systems

Designed for maximum scale-out capability, with up to 100GB/s total bandwidth across all superchips through InfiniBand switches, BlueField-3 DPUs, and ConnectX-7 NICs.

Easy-to-Program Heterogeneous Platform

Brings developers' preferred programming languages to the CUDA platform, along with hardware-accelerated memory coherency, for easier adaptation to the new platform.

NVIDIA CUDA Platform

The CUDA platform is optimized for the new Arm-based CPU, enabling accelerated computing on the superchips alongside add-in cards and networking systems.

Maximize Configuration Flexibility with the GIGABYTE Server Lineup

Applications for the NVIDIA Grace™ CPU Superchip & GH200 Grace Hopper Superchip

Featured New Products