Tech-Guide

How to Get Your Data Center Ready for AI? Part One: Advanced Cooling

The proliferation of artificial intelligence has driven broader adoption of innovative technologies, such as advanced cooling and cluster computing, in data centers around the world. In particular, the rollout of powerful AI processors with ever-higher thermal design power (TDP) has made it all but mandatory for data centers to upgrade or even retrofit their infrastructure for more energy-efficient and cost-effective cooling. In part one of GIGABYTE Technology’s latest Tech Guide, we explore the industry’s most advanced cooling solutions so you can evaluate whether your data center can leverage them to get ready for the era of AI.

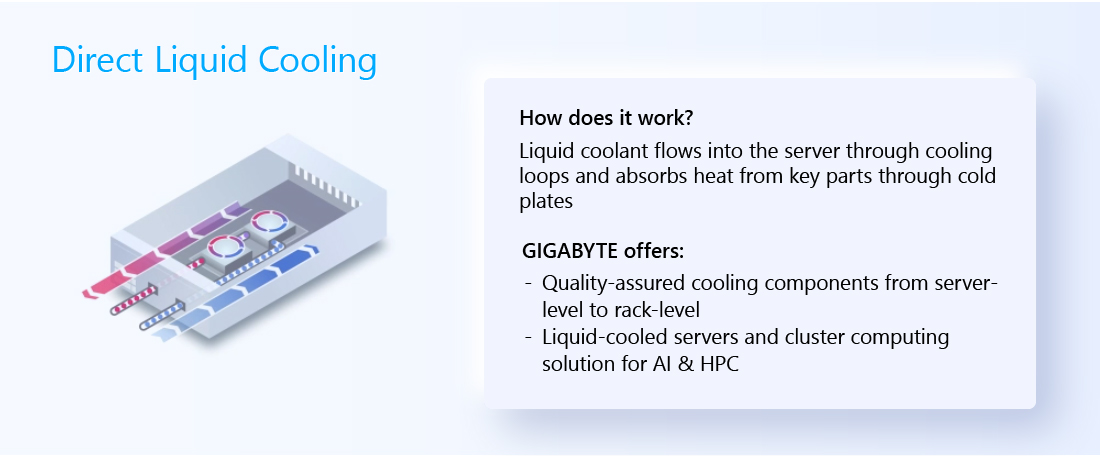

Direct Liquid Cooling (DLC) or Direct-to-chip (D2C) Liquid Cooling

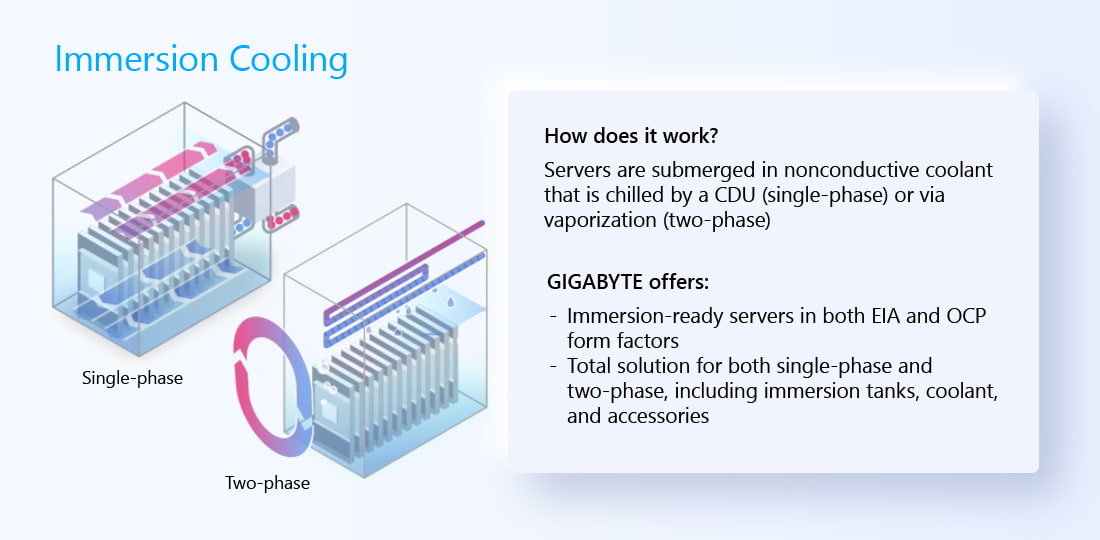

Immersion Cooling: Single-phase and Two-phase

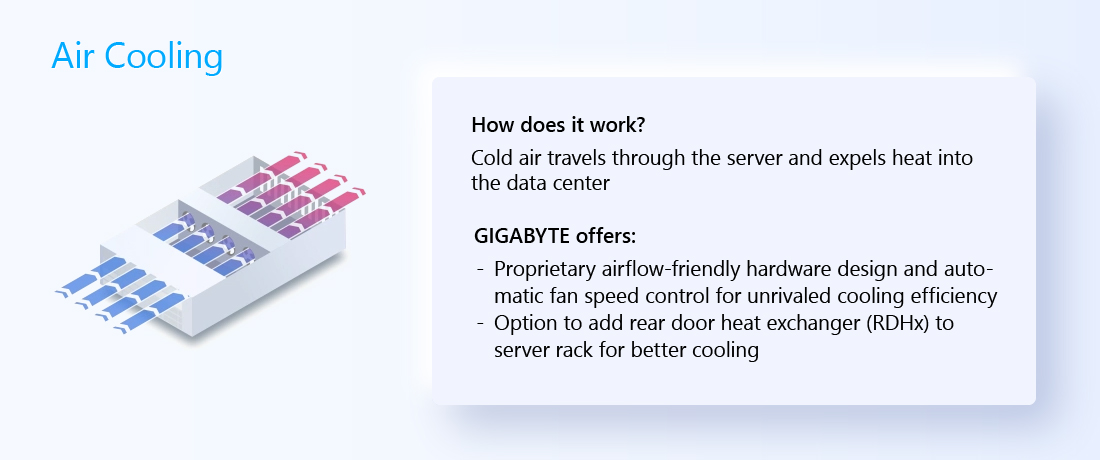

Better Air Cooling with GIGABYTE’s Proprietary Design and RDHx


Tags: Immersion Cooling, Artificial Intelligence (AI), Data Center, Cooling Distribution Unit (CDU), HPC, PUE, Open Compute Project (OCP)
